
What Can You Do About DeepSeek Right Now


Author: Graciela | Date: 25-02-01 04:59 | Views: 10 | Comments: 0

Alternatively, you can download the DeepSeek app for iOS or Android and use the chatbot on your smartphone. Use of the DeepSeek-V2 Base/Chat models is subject to the Model License. DeepSeek was the first company to publicly match OpenAI, which earlier this year launched the o1 class of models that use the same RL technique - a further sign of how sophisticated DeepSeek is. The company prices its products and services well below market value - and gives others away for free. The fine-tuning job relied on a rare dataset he’d painstakingly gathered over months - a compilation of interviews psychiatrists had done with patients with psychosis, as well as interviews those same psychiatrists had done with AI systems. I enjoy providing models and helping people, and would love to be able to spend even more time doing it, as well as expanding into new projects like fine-tuning/training. Why this matters - symptoms of success: Stuff like Fire-Flyer 2 is a symptom of a startup that has been building sophisticated infrastructure and training models for many years. When the last human driver finally retires, we can update the infrastructure for machines with cognition at kilobits/s. Read more: Sapiens: Foundation for Human Vision Models (arXiv).


Read more: The Unbearable Slowness of Being (arXiv). For extended sequence models - e.g. 8K, 16K, 32K - the necessary RoPE scaling parameters are read from the GGUF file and set by llama.cpp automatically. The model read psychology texts and built software for administering personality tests. There was a kind of ineffable spark creeping into it - for lack of a better word, personality. There was a tangible curiosity coming off of it - a tendency towards experimentation. He knew the data wasn’t in any other systems because the journals it came from hadn’t been consumed into the AI ecosystem - there was no trace of them in any of the training sets he was aware of, and basic knowledge probes on publicly deployed models didn’t seem to indicate familiarity. Of course he knew that people could get their licenses revoked - but that was for terrorists and criminals and other bad types. But in his mind he wondered if he could really be so confident that nothing bad would happen to him. And in it he thought he could see the beginnings of something with an edge - a mind discovering itself via its own textual outputs, learning that it was separate from the world it was being fed.
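On the RoPE scaling point above, here is a minimal sketch of what a linear scaling factor does to rotary position angles. This is not llama.cpp's code: the function name `rope_angles`, the base of 10000, and the context lengths are assumptions chosen for illustration, and linear scaling is only one of several scaling schemes a model file may specify.

```python
def rope_angles(position, head_dim, base=10000.0, scale=1.0):
    """Rotation angles for one token position (hypothetical helper).

    With linear scaling, the position is divided by `scale` before the
    usual per-dimension frequencies are applied.
    """
    scaled_pos = position / scale
    return [scaled_pos * base ** (-2 * i / head_dim) for i in range(head_dim // 2)]


# Assumed example values: a model trained at 4K context, extended to 16K.
original_ctx = 4096
extended_ctx = 16384
scale = extended_ctx / original_ctx  # linear scaling factor of 4.0

# A position deep in the extended window is squeezed back into the
# range of positions the model saw during training.
extended = rope_angles(16380, head_dim=64, scale=scale)
native = rope_angles(4095, head_dim=64)
```

The point of the sketch is only that the scaling factor compresses long positions into the trained range; when the parameters come from the GGUF file, none of this needs to be set by hand.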


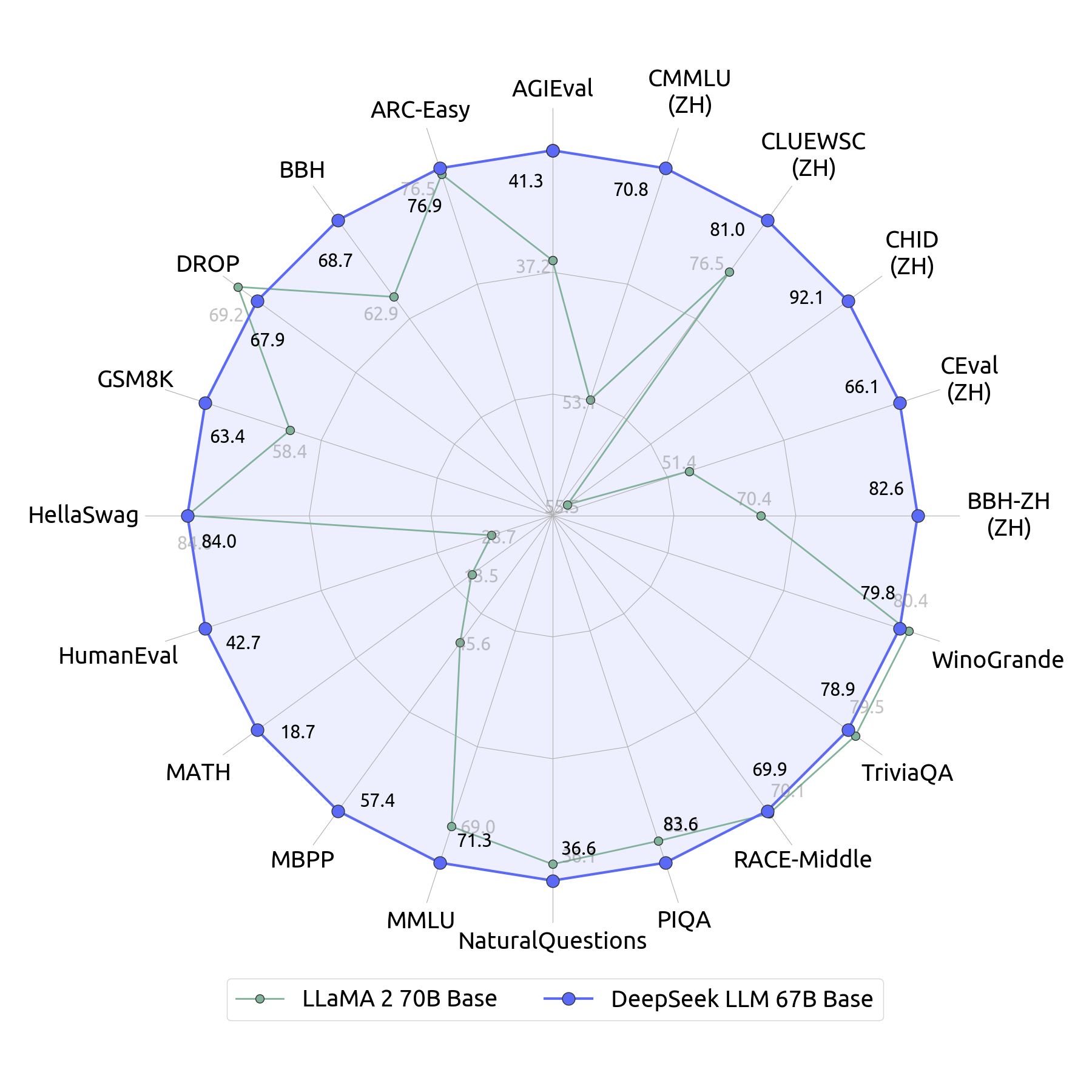

We’re thrilled to share our progress with the community and see the gap between open and closed models narrowing. "We estimate that compared to the best international standards, even the best domestic efforts face about a twofold gap in terms of model structure and training dynamics," Wenfeng says. "This means we need twice the computing power to achieve the same results." Additionally, there’s about a twofold gap in data efficiency, meaning we need twice the training data and computing power to reach comparable results. Combined, this requires four times the computing power. "This run presents a loss curve and convergence rate that meets or exceeds centralized training," Nous writes. Track the Nous run here (Nous DisTrO dashboard). Check out Andrew Critch’s post here (Twitter). There’s no easy answer to any of this - everyone (myself included) needs to figure out their own morality and approach here. John Muir, the Californian naturalist, was said to have let out a gasp when he first saw the Yosemite valley, seeing unprecedentedly dense and love-filled life in its stone and trees and wildlife. K), a lower sequence length may have to be used. "The practical knowledge we have accrued may prove valuable for both industrial and academic sectors."
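The "four times" figure in the quote above is just the two estimated gaps multiplied together. A minimal sketch of that arithmetic, assuming the gaps compound multiplicatively as the quote implies (the variable and function names are hypothetical):

```python
import math

# Gap estimates taken from the quote; names are illustrative only.
structure_and_dynamics_gap = 2.0  # model structure and training dynamics
data_efficiency_gap = 2.0         # data efficiency


def combined_compute_multiplier(*gaps):
    """Multiply independent efficiency gaps into one compute multiplier."""
    return math.prod(gaps)


multiplier = combined_compute_multiplier(structure_and_dynamics_gap, data_efficiency_gap)
print(f"Compute needed relative to the frontier: {multiplier:.0f}x")
```

If the gaps overlapped rather than compounding, the combined multiplier would be lower; the quote treats them as independent.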

Researchers at Tsinghua University have simulated a hospital, filled it with LLM-powered agents pretending to be patients and medical staff, then shown that such a simulation can be used to improve the real-world performance of LLMs on medical test exams… DeepSeek's first generation of reasoning models offers performance comparable to OpenAI-o1 and includes six dense models distilled from DeepSeek-R1 based on Llama and Qwen. AI CEO Elon Musk simply went online and started trolling DeepSeek’s performance claims. DeepSeek’s system: The system is called Fire-Flyer 2 and is a hardware and software system for doing large-scale AI training. As DeepSeek’s founder said, the only challenge remaining is compute. If we get it wrong, we’re going to be dealing with inequality on steroids - a small caste of people will be getting a vast amount done, aided by ghostly superintelligences that work on their behalf, while a larger set of people watch the success of others and ask ‘why not me?’ The success of the company's A.I.
