인사말

건강한 삶과 행복,환한 웃음으로 좋은벗이 되겠습니다

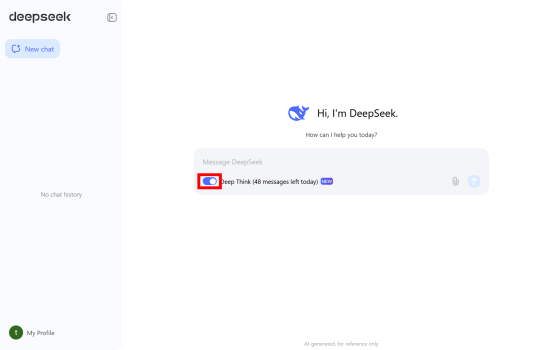

How to Turn DeepSeek Into Success

Author: Elsa | Date: 25-02-17 14:28 | Views: 9 | Comments: 0

Efficient resource use: With fewer than 6% of its parameters active at a time, DeepSeek considerably lowers computational costs. Despite its excellent performance on key benchmarks, DeepSeek-V3 required only 2.788 million H800 GPU hours for its full training, at a training cost of about $5.6 million. o1-mini also costs more than GPT-4o. ChatGPT has become popular for handling Python, Java, and many other programming languages. DeepSeek-V3 likely picked up text generated by ChatGPT during its training, and somewhere along the way it started associating itself with the name. With DeepSeek-V3, the latest model, users experience faster responses and improved text coherence compared to earlier AI models. Recently, DeepSeek announced DeepSeek-V3, a Mixture-of-Experts (MoE) large language model with 671 billion total parameters, of which 37 billion are activated for each token. I hope labs iron out the wrinkles in scaling model size. Remember, inference scaling endows today’s models with tomorrow’s capabilities. But if we do end up scaling model size to deal with these changes, what was the point of inference compute scaling again?
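As a quick sanity check on the numbers above, here is a minimal Python sketch that derives the active-parameter share and the implied cost per GPU hour. The inputs are the rounded figures quoted in this post; the per-hour rate is a derived value, not something the post states, and the function names are made up for illustration.

```python
# Figures quoted in the post (rounded); everything else is derived from them.
TOTAL_PARAMS = 671e9        # total parameters in DeepSeek-V3
ACTIVE_PARAMS = 37e9        # parameters activated per token
GPU_HOURS = 2.788e6         # H800 GPU hours for the full training run
TRAINING_COST_USD = 5.6e6   # approximate training cost


def active_fraction(active: float, total: float) -> float:
    """Share of parameters that take part in processing a single token."""
    return active / total


def implied_hourly_rate(cost_usd: float, gpu_hours: float) -> float:
    """Cost per GPU hour implied by the total cost and total GPU hours."""
    return cost_usd / gpu_hours


fraction = active_fraction(ACTIVE_PARAMS, TOTAL_PARAMS)
rate = implied_hourly_rate(TRAINING_COST_USD, GPU_HOURS)

print(f"Active parameters per token: {fraction:.2%}")   # about 5.51%
print(f"Implied cost per GPU hour: ${rate:.2f}")        # about $2.01
```

The result is consistent with the "fewer than 6%" claim: 37 billion out of 671 billion is roughly 5.5%.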

You can download the DeepSeek-V3 model from GitHub and Hugging Face. DeepSeek-V3 has 671 billion parameters, with 37 billion activated per token, and can handle context lengths of up to 128,000 tokens. DeepSeek-V3 is also highly efficient at inference. You won't see inference performance scale if you can’t gather near-unlimited practice examples for o1. If you want faster AI progress, you want inference to be a 1:1 replacement for training. Whether or not they generalize beyond their RL training is a trillion-dollar question. This gives you a rough idea of some of their training data distribution. The reason for this identity confusion seems to come down to training data. This model is recommended for users looking for the best possible performance who are comfortable sharing their data externally and using models trained on any publicly available code. It was trained on 14.8 trillion tokens over roughly two months, using 2.788 million H800 GPU hours, at a cost of about $5.6 million. We can now benchmark any Ollama model with DevQualityEval by either using an existing Ollama server (on the default port) or by starting one on the fly automatically. However, for high-end and real-time processing, it’s better to have a GPU-powered server or cloud-based infrastructure.
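For readers who want to try the "existing Ollama server on the default port" route, the sketch below sends one prompt to Ollama's `/api/generate` endpoint on port 11434 using only the Python standard library. It is not how DevQualityEval talks to Ollama, just a minimal illustration. The helper names and the model tag are assumptions; use whatever tag `ollama list` shows on your machine.

```python
import json
import urllib.request

# Ollama listens on port 11434 by default.
OLLAMA_DEFAULT_URL = "http://localhost:11434"


def build_generate_request(model: str, prompt: str,
                           base_url: str = OLLAMA_DEFAULT_URL) -> urllib.request.Request:
    """Build a non-streaming POST request for Ollama's /api/generate endpoint."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        f"{base_url}/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str,
             base_url: str = OLLAMA_DEFAULT_URL, timeout: float = 120.0) -> str:
    """Send the prompt to a running Ollama server and return the generated text."""
    request = build_generate_request(model, prompt, base_url)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read())["response"]


# Example call (needs a running server and a pulled model; the tag is hypothetical):
# print(generate("deepseek-v3", "Write a Python function that reverses a string."))
```

Building the request separately from sending it makes it easy to inspect the payload without a server running.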

This approach has, for several reasons, led some to believe that rapid advances may reduce the demand for high-end GPUs, impacting companies like Nvidia. OpenAI did not release scores for o1-mini, which suggests they may be worse than o1-preview. OpenAI admits that it trained o1 on domains with easy verification but hopes reasoners generalize to all domains. A simple way to check how reasoners perform on domains without easy verification is benchmarks. The long-term research goal is to develop artificial general intelligence to revolutionize the way computers interact with humans and handle complex tasks. Last month, Wiz Research said it had identified a DeepSeek database containing chat history, secret keys, backend details and other sensitive information exposed on the internet. "There’s little diversification benefit to owning both the S&P 500 and (Nasdaq 100)," wrote Jessica Rabe, co-founder of DataTrek Research. For comparison, the comparable open-source Llama 3 405B model required 30.8 million GPU hours for training. DeepSeek-V3's training cost is significantly lower than the $100 million spent on training OpenAI's GPT-4. o1-style reasoners do not meaningfully generalize beyond their training. DeepSeek-V3 is cost-efficient thanks to its support for FP8 training and deep engineering optimizations.
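To put the comparison in proportion, this small sketch computes the ratios from the figures quoted in this post. Treat the results as rough: the numbers are rounded, the GPU hours were logged on different hardware, and the GPT-4 figure is the one cited here rather than an audited cost.

```python
# All inputs are the rounded figures quoted in the post.
DEEPSEEK_V3_GPU_HOURS = 2.788e6
LLAMA3_405B_GPU_HOURS = 30.8e6
DEEPSEEK_V3_COST_USD = 5.6e6
GPT4_COST_USD = 100e6


def ratio(larger: float, smaller: float) -> float:
    """How many times larger the first quantity is than the second."""
    return larger / smaller


gpu_hour_ratio = ratio(LLAMA3_405B_GPU_HOURS, DEEPSEEK_V3_GPU_HOURS)
cost_ratio = ratio(GPT4_COST_USD, DEEPSEEK_V3_COST_USD)

print(f"Llama 3 405B used about {gpu_hour_ratio:.1f}x the GPU hours of DeepSeek-V3")
print(f"The cited GPT-4 cost is about {cost_ratio:.1f}x DeepSeek-V3's training cost")
```

On these numbers, Llama 3 405B used roughly 11 times the GPU hours, and the cited GPT-4 cost is roughly 18 times DeepSeek-V3's.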

With its impressive performance and affordability, DeepSeek-V3 could democratize access to advanced AI models. This model has made headlines for its impressive performance and cost efficiency. MoE allows the model to specialize in different problem domains while maintaining overall efficiency. In five out of eight generations, DeepSeek-V3 claims to be ChatGPT (v4), while claiming to be DeepSeek-V3 only three times. Despite its capabilities, users have noticed an odd behavior: DeepSeek-V3 often claims to be ChatGPT. It started with ChatGPT taking over the internet, and now we’ve got names like Gemini, Claude, and the latest contender, DeepSeek-V3. Some critique of reasoning models like o1 (by OpenAI) and R1 (by DeepSeek). This pricing is nearly one-tenth of what OpenAI and other leading AI companies currently charge for their flagship frontier models. How did it go from a quant trader’s passion project to one of the most talked-about models in the AI space?
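The MoE idea mentioned above, where only a few experts run for each token, can be illustrated with a toy top-k router. This is a generic softmax top-k sketch with made-up scalar "experts" and router scores. It is not DeepSeek-V3's actual routing scheme, which the post does not describe.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def route_top_k(router_logits, k):
    """Pick the k highest-scoring experts and renormalize their weights to sum to 1."""
    probs = softmax(router_logits)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)
    return [(i, probs[i] / norm) for i in top]


def moe_forward(x, experts, router_logits, k=2):
    """Weighted sum of the outputs of the selected experts only; the rest never run."""
    return sum(weight * experts[i](x) for i, weight in route_top_k(router_logits, k))


# Hypothetical toy setup: four experts that each scale the input differently.
experts = [lambda x, s=s: s * x for s in (1.0, 2.0, 3.0, 4.0)]
router_logits = [0.1, 2.0, -1.0, 1.5]  # made-up router scores for one token

selected = route_top_k(router_logits, k=2)
output = moe_forward(1.0, experts, router_logits, k=2)
print("Selected experts and weights:", selected)
print("MoE output:", output)
```

With k=2 out of 4 experts, half of the expert compute is skipped for this token. The same principle, at much larger scale, is what lets a model with 671 billion parameters activate only 37 billion per token.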
