Six Strategies of DeepSeek ChatGPT Domination

Author: Connie Figueroa | Date: 25-03-09 16:24 | Views: 5 | Comments: 0

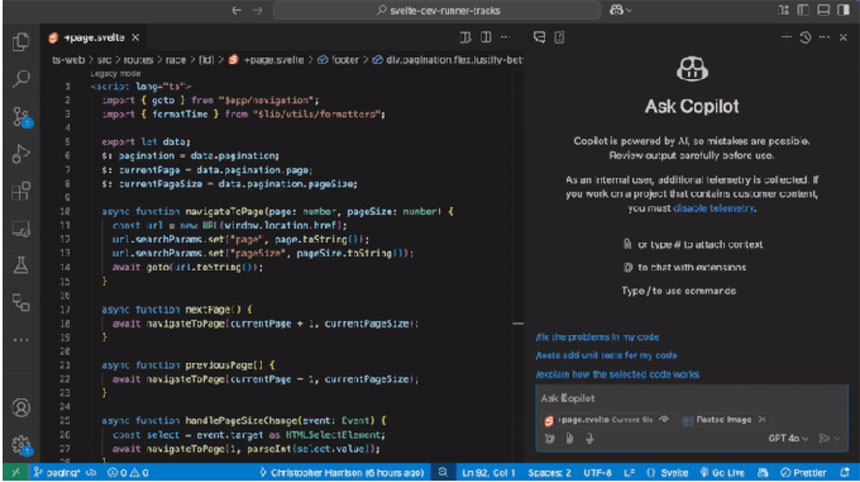

In mainland China, the ruling Chinese Communist Party has ultimate authority over what information and images can and cannot be shown, part of its iron-fisted efforts to maintain control over society and suppress all forms of dissent. Bloomberg notes that while the prohibition remains in place, Defense Department personnel can use DeepSeek’s AI through Ask Sage, an authorized platform that doesn’t connect directly to Chinese servers.

While commercial models just barely outclass local models, the results are extremely close. At first we started evaluating popular small code models, but as new models kept appearing we couldn’t resist adding DeepSeek Coder V2 Lite and Mistral’s Codestral. Once AI assistants added support for local code models, we immediately wanted to evaluate how well they work. However, while these models are useful, especially for prototyping, we’d still like to caution Solidity developers against being too reliant on AI assistants. The local models we tested are specifically trained for code completion, while the large commercial models are trained for instruction following. We wanted to improve Solidity support in large language code models. We are open to adding support for other AI-enabled code assistants; please contact us to see what we can do.

Almost certainly. I hate to see a machine take anyone’s job (especially if it’s one I might want). The available data sets are also often of poor quality; we looked at one open-source training set, and it included more junk with the extension .sol than bona fide Solidity code. Writing a good evaluation is very difficult, and writing a perfect one is impossible. Solidity is present in approximately zero code evaluation benchmarks (even MultiPL, which includes 22 languages, is missing Solidity). Read on for a more detailed evaluation and our methodology. More about CompChomper, including technical details of our evaluation, can be found in the CompChomper source code and documentation. CompChomper makes it easy to evaluate LLMs for code completion on tasks you care about. Local models are also better than the large commercial models for certain kinds of code completion tasks. The open-source DeepSeek-V3 is expected to foster advancements in coding-related engineering tasks. Full weight models (16-bit floats) were served locally via HuggingFace Transformers to evaluate raw model capability. These models are what developers are likely to actually use, and measuring different quantizations helps us understand the impact of model weight quantization.
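To make the quantization trade-off concrete, here is a minimal sketch in plain Python. It is not taken from CompChomper or any model runtime: the function names, the toy weight values, and the 7-billion and 16-billion parameter counts are illustrative assumptions, and the quantizer is a simple symmetric round-to-nearest scheme rather than whatever scheme a given model release uses.

```python
def weight_memory_gib(num_params: float, bits_per_weight: int) -> float:
    """Approximate memory for the weights alone, in GiB (ignores activations and caches)."""
    return num_params * bits_per_weight / 8 / 2**30


def quantize_roundtrip(weights: list[float], bits: int) -> list[float]:
    """Quantize to signed integers of the given width, then map back to floats."""
    levels = 2 ** (bits - 1) - 1              # 7 levels per side for 4-bit, 127 for 8-bit
    scale = max(abs(w) for w in weights) / levels
    if scale == 0:
        return list(weights)                  # all-zero input needs no quantization
    return [round(w / scale) * scale for w in weights]


def mean_abs_error(original: list[float], approx: list[float]) -> float:
    """Average absolute difference between original and reconstructed weights."""
    return sum(abs(a - b) for a, b in zip(original, approx)) / len(original)


# Toy weights (assumed values, for illustration only).
weights = [0.91, -0.42, 0.07, -0.88, 0.33, 0.15, -0.61, 0.02]

for bits in (8, 4):
    error = mean_abs_error(weights, quantize_roundtrip(weights, bits))
    print(f"{bits}-bit mean absolute error: {error:.4f}")

# Hypothetical model sizes: a smaller model at 16 bits vs a larger one at 4 bits.
print(f"7B  @ 16-bit: {weight_memory_gib(7e9, 16):.1f} GiB")
print(f"16B @  4-bit: {weight_memory_gib(16e9, 4):.1f} GiB")
```

Under these assumptions, fewer bits mean a larger reconstruction error per weight, yet a larger model at 4 bits can still need less memory than a smaller one at 16 bits. This is the kind of trade-off that measuring different quantizations is meant to expose.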

A larger model quantized to 4 bits is better at code completion than a smaller model of the same variety. We also learned that for this task, model size matters more than quantization level, with larger but more quantized models almost always beating smaller but less quantized alternatives. The whole line completion benchmark measures how accurately a model completes a whole line of code, given the prior line and the next line.

Figure 2: Partial line completion results from popular coding LLMs.

Reports suggest that DeepSeek R1 can be up to twice as fast as ChatGPT for complex tasks, particularly in areas like coding and mathematical computations.

Figure 4: Full line completion results from popular coding LLMs.

Although CompChomper has only been tested against Solidity code, it is largely language independent and can be easily repurposed to measure completion accuracy of other programming languages. CompChomper provides the infrastructure for preprocessing, running multiple LLMs (locally or in the cloud via Modal Labs), and scoring. It may be tempting to look at our results and conclude that LLMs can generate good Solidity. However, counting "just" lines of coverage is misleading since a line can have multiple statements, i.e. coverage items must be very granular for a good assessment.
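The whole line completion setup described above can be sketched as follows. This is a minimal illustration, not CompChomper’s implementation: `make_whole_line_task` and `score_completion` are hypothetical names, and scoring with exact match plus a `difflib` similarity ratio is an assumed choice of metric. Only the task shape (prior line and next line as context, the hidden line as target) comes from the text.

```python
from difflib import SequenceMatcher


def make_whole_line_task(lines: list[str], index: int) -> dict:
    """Hide lines[index]; keep the prior line and the next line as context."""
    if not 0 < index < len(lines) - 1:
        raise ValueError("target line needs both a prior and a next line")
    return {
        "prefix": lines[index - 1],
        "suffix": lines[index + 1],
        "target": lines[index],
    }


def score_completion(predicted: str, target: str) -> dict:
    """Compare a model's line to the hidden line, ignoring surrounding whitespace."""
    pred, gold = predicted.strip(), target.strip()
    return {
        "exact": pred == gold,
        "similarity": SequenceMatcher(None, pred, gold).ratio(),
    }


# Toy Solidity snippet (assumed example input).
source = [
    "function deposit() public payable {",
    "    balances[msg.sender] += msg.value;",
    "}",
]

task = make_whole_line_task(source, 1)

# Stand-in for a model call: a real harness would send prefix and suffix to an LLM.
fake_prediction = "balances[msg.sender] += msg.value;"
print(score_completion(fake_prediction, task["target"]))
```

A similarity score alongside exact match gives partial credit to near misses, which matters because a completion can be functionally fine while differing in whitespace or naming.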

However, before we can improve, we must first measure. You specify which git repositories to use as a dataset and what kind of completion model you want to measure. The best performers are variants of DeepSeek Coder; the worst are variants of CodeLlama, which has clearly not been trained on Solidity at all, and CodeGemma via Ollama, which appears to have some kind of catastrophic failure when run that way. Led by DeepSeek founder Liang Wenfeng, the team is a pool of fresh talent. When DeepSeek-V2 was released in June 2024, according to founder Liang Wenfeng, it touched off a price war with other Chinese Big Tech, such as ByteDance, Alibaba, Baidu, and Tencent, as well as larger, better-funded AI startups, like Zhipu AI. This is why we recommend thorough unit tests, using automated testing tools like Slither, Echidna, or Medusa, and, of course, a paid security audit from Trail of Bits. This work also required an upstream contribution for Solidity support to tree-sitter-wasm, to benefit other development tools that use tree-sitter.
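The dataset step mentioned above, pointing the tool at git repositories, could look roughly like the sketch below. It assumes the repository is already cloned to a local directory. The function names are hypothetical, and the "contains `pragma solidity`" check is an assumed heuristic for skipping junk files that merely carry the .sol extension, not a rule taken from CompChomper.

```python
from pathlib import Path
from typing import Iterator


def looks_like_solidity(text: str) -> bool:
    """Crude heuristic (an assumption): real Solidity files usually declare a pragma."""
    return "pragma solidity" in text


def iter_solidity_files(repo_dir: str) -> Iterator[Path]:
    """Yield .sol files under a local checkout that pass the heuristic."""
    for path in sorted(Path(repo_dir).rglob("*.sol")):
        if not path.is_file():
            continue
        text = path.read_text(encoding="utf-8", errors="ignore")
        if looks_like_solidity(text):
            yield path


def count_candidate_lines(repo_dir: str) -> int:
    """Count non-blank lines across accepted files, a rough measure of dataset size."""
    total = 0
    for path in iter_solidity_files(repo_dir):
        text = path.read_text(encoding="utf-8", errors="ignore")
        total += sum(1 for line in text.splitlines() if line.strip())
    return total
```

A call such as `count_candidate_lines("path/to/checkout")` (the path is a placeholder) gives a quick sense of how much usable Solidity a repository contributes before any completion tasks are generated from it.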
